The 2021 Nobel Prize and the Trend of Economic Thinking

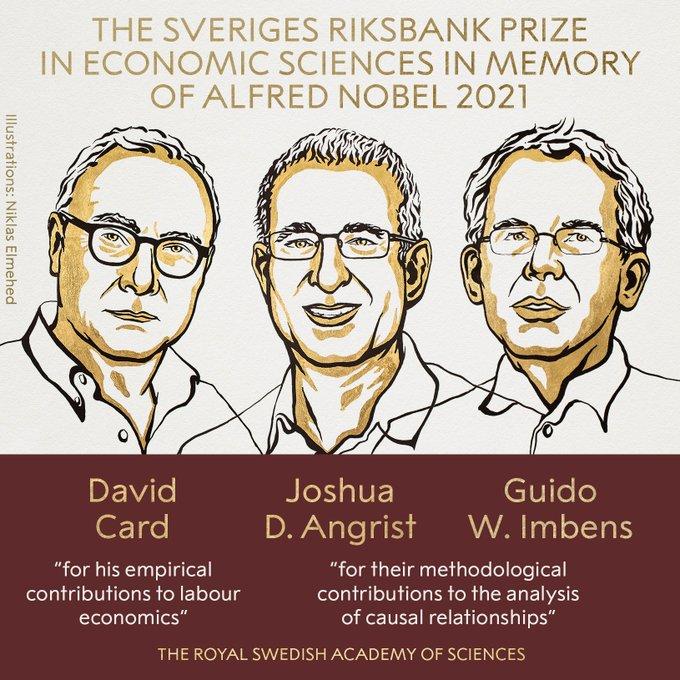

The 2021 Nobel Prize in Economics has been awarded to Berkeley’s David Card, MIT’s Josh Angrist, and Stanford’s Guido Imbens for their work on “natural experiments,” a currently fashionable approach to estimating the causal impact of one economic variable on another. Card, of course, became famous inside and outside the profession for his 1994 paper with the late Alan Krueger on the minimum wage. Card and Krueger eschewed the conventional supply-and-demand analysis of the minimum wage (which predicts that, other things equal, increasing the minimum wage leads to increased unemployment) in favor of an atheoretical, empirical exercise. They compared the change in fast-food restaurant employment in New Jersey, which increased its state minimum wage, to that in neighboring Pennsylvania, which didn’t, and found no substantial differences, concluding that, contrary to the conventional wisdom among economists, minimum wages do not price low-productivity workers out of the labor market.
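The comparison described above is what is usually called a difference-in-differences estimate. As a rough sketch only, and with notation that is my own rather than Card and Krueger's (here $\bar{E}$ stands for average employment per restaurant in a given state and period), the logic can be written as:

```latex
\hat{\delta}_{\text{DiD}}
  = \left( \bar{E}_{\text{NJ},\,\text{after}} - \bar{E}_{\text{NJ},\,\text{before}} \right)
  - \left( \bar{E}_{\text{PA},\,\text{after}} - \bar{E}_{\text{PA},\,\text{before}} \right)
```

Reading $\hat{\delta}_{\text{DiD}}$ as the causal effect of the wage increase rests on the assumption that, absent the increase, employment in the two states would have moved in parallel. The data alone cannot establish that assumption.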

While the details of the Card-Krueger study are widely disputed (to put it politely), the empirical approach they championed is not. Their work helped usher in what has been called the “credibility revolution” or “identification revolution” in applied microeconomics (also called the “design-based approach,” as opposed to the older “model-based approach”). Angrist and Imbens developed econometric techniques for estimating the “treatment effects” that are central to this approach. Unlike laboratory experiments, in which subjects can be assigned randomly to treatment and control groups with the experimenter holding all other conditions constant, observational studies require statistical tricks to satisfy the “ceteris paribus” conditions. Developing and applying these tricks has been the main focus of mainstream applied economics over the last three decades.

Despite its popularity, this approach is not without its critics. To Austrians, causality in social science is a theoretical construct, not something that can be teased out of the data without some a priori understanding of human behavior and how it affects (and is affected by) economic and social phenomena. Experimental and quasi-experimental methods may provide some limited historical-empirical insight but tend to lack “external validity,” i.e., one never knows if the results hold up in other settings. There are also plenty of mainstream critics of the overuse of natural experiments and field experiments (randomized controlled trials) and of the overemphasis on identification over importance (George Akerlof calls this a bias toward “hardness”).

More generally, the newer approaches herald a declining interest in theory in favor of what might be called crude empiricism: “crude” not in the sense that the empirical methods are unsophisticated, but in the sense that the underlying questions are simple “does this x affect this y” questions that rarely involve economic ideas, constructs, or relationships. I have previously written about the move away from economic questions and toward issues that are “small” in the sense that they don’t involve economic principles at all. The decreasing popularity of theory corresponds to the naive belief that science, as Lord Kelvin famously put it, is all about measurement and that the data somehow “speak for themselves,” when in reality empirical data are useful only in the way they are interpreted by thinking, choosing, acting human beings.